When it comes to optimising your Shopify store for search engines, one often-overlooked file plays a crucial role: robots.txt. This simple text file controls how search engine crawlers interact with your site, letting you specify which pages they may crawl and which they should skip. For Shopify store owners, understanding how to edit and manage this file can significantly impact your SEO strategy.

Robots.txt helps prevent search engines from crawling irrelevant or duplicate content, so crawlers spend their time on the pages that matter. By editing this file, you can better control which parts of your store search engines crawl, which can help your site's performance in search results.

What is Robots.txt?

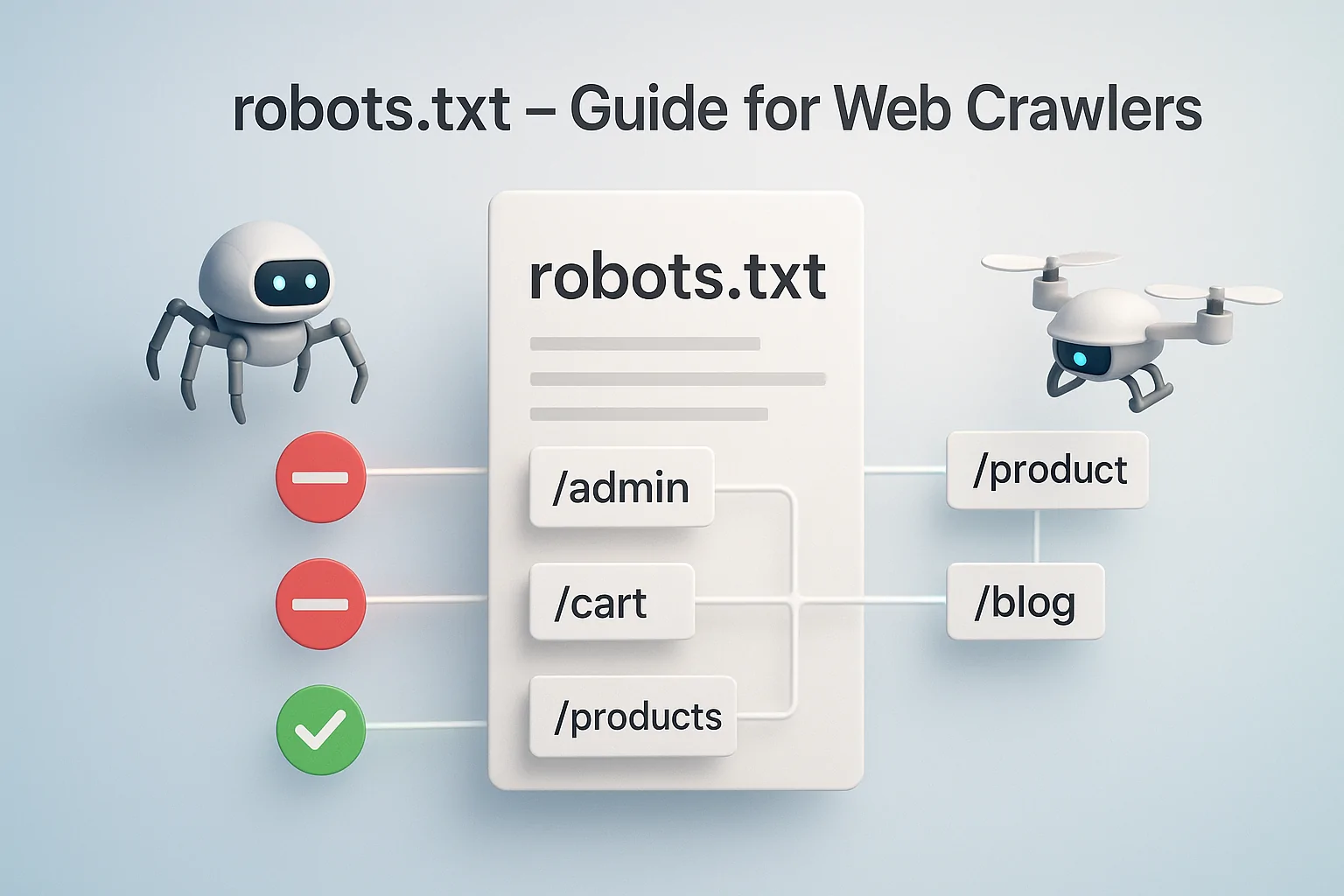

The robots.txt file is a simple text file served from the root of your website (for example, yourstorename.com/robots.txt). It tells web crawlers (like Googlebot, Bingbot, etc.) which pages or sections of your site they should or should not crawl. This helps search engines spend their crawling effort on the content that matters rather than on low-value or duplicate URLs.

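Search engines rely on the instructions in robots.txt to manage their crawling process. For example, you can block certain pages, such as admin sections, cart pages, or duplicate content, from being crawled while allowing key product pages and blog posts to be crawled. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, so use a noindex meta tag when a page must stay out of results (and leave that page crawlable so search engines can see the tag).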
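To see how a crawler applies rules like these, here is a minimal sketch that asks Python's standard-library urllib.robotparser whether a crawler may fetch a few URLs. The rules, the URLs and the can_crawl helper are hypothetical illustrations, not Shopify's actual default file. This parser uses simple prefix matching and does not understand Google's * and $ wildcards, so treat it as an approximation of how real crawlers behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; not Shopify's real default file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /checkout
"""


def can_crawl(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if these robots.txt rules let user_agent fetch url.

    This only answers the crawling question; it says nothing about indexing.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    base = "https://yourstorename.com"  # placeholder store domain
    for path in ("/cart", "/checkout", "/products/blue-shirt", "/blogs/news"):
        verdict = "crawl" if can_crawl(ROBOTS_TXT, base + path) else "skip"
        print(f"{path}: {verdict}")
```

A False result means a compliant crawler will not fetch the page; it does not by itself remove the URL from search results.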

How to Access robots.txt in Shopify

Accessing and editing your robots.txt file in Shopify is a straightforward process. Shopify provides a built-in feature that automatically generates and manages this file for your store. However, if you need to make custom changes, here’s how you can access it:

- Log in to your Shopify Admin

Start by logging into your Shopify account. Use your credentials to access the Shopify admin dashboard.

- Navigate to Themes

Once logged in, go to the Online Store section on the left sidebar and click Themes.

- Open the Code Editor

Next to your published theme, click the ... button and select Edit code.

- Create the robots.txt.liquid Template

In the code editor, click Add a new template, select robots, and click Create template. Shopify creates a robots.txt.liquid file containing the Liquid code that outputs the default rules. If you never create this template, Shopify simply serves its default robots.txt.

- Access the File

In robots.txt.liquid, you can review the code that generates your current robots.txt and add or remove rules as needed, controlling which sections of your site are accessible to search engines. To see the rendered result, visit yourstorename.com/robots.txt.
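Shopify serves the rendered file at /robots.txt on your store's domain, so you can also review it outside the admin with a small script. This sketch uses only Python's standard library; robots_txt_url and fetch_robots_txt are hypothetical helper names, and yourstorename.com is a placeholder for your own domain.

```python
from urllib.request import Request, urlopen


def robots_txt_url(store_domain: str) -> str:
    """Build https://<domain>/robots.txt from a bare or full domain."""
    domain = store_domain.strip()
    for prefix in ("https://", "http://"):
        if domain.startswith(prefix):
            domain = domain[len(prefix):]
    return f"https://{domain.rstrip('/')}/robots.txt"


def fetch_robots_txt(store_domain: str, timeout: float = 10.0) -> str:
    """Download the robots.txt your store is currently serving."""
    request = Request(
        robots_txt_url(store_domain),
        headers={"User-Agent": "robots-txt-review/0.1"},  # arbitrary identifier
    )
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, print(fetch_robots_txt("yourstorename.com")) prints the rules your store is currently serving. Saving that output before each edit gives you a copy to compare against or restore.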

Editing robots.txt in Shopify

Once you’ve accessed your robots.txt file in Shopify, editing it is simple. You can customise the instructions within the file to control how search engines crawl your website. Here’s how to make changes:

Identify the Section You Want to Edit

First, identify the section of your website you want to modify. Common uses for editing robots.txt include:

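- Blocking search engines from crawling certain pages (e.g., cart, checkout, or admin). Shopify's default file already disallows these, so custom rules usually target other sections.

- Allowing specific search engines to crawl particular pages or sections, by giving that crawler its own User-agent group.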
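Rules for a specific search engine go in their own User-agent group. A crawler follows only the group that matches it, so a Googlebot group replaces the * rules for Googlebot rather than adding to them. The sketch below checks this with urllib.robotparser; the rules, URLs and allowed_for helper are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot gets its own group, every other crawler uses "*".
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /search

User-agent: *
Disallow: /search
Disallow: /blogs/
"""


def allowed_for(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check url for one crawler. robotparser uses the first group whose
    User-agent name matches the crawler and falls back to the "*" group."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://yourstorename.com/blogs/news"  # placeholder URL
    for bot in ("Googlebot", "Bingbot"):
        print(bot, allowed_for(ROBOTS_TXT, bot, url))
```

Googlebot may crawl the blog because its own group has no /blogs/ rule, while Bingbot falls back to the * group and is blocked.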

Edit the File

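You customise the instructions by adding directives to the robots.txt file. For example:

- To disallow a specific directory or page:

User-agent: *
Disallow: /cart

This code tells search engines not to crawl the cart page.

- To allow a specific page or section to be crawled, you can use the Allow directive:

User-agent: *
Allow: /blogs/

This allows search engines to crawl your blog section. Because everything is crawlable unless a rule says otherwise, Allow is mainly useful for making an exception to a broader Disallow rule.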
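When Allow and Disallow rules overlap, Google documents that the rule with the longest matching path wins and that the less restrictive rule (Allow) is used on a tie; Google also supports * (any sequence of characters) and a trailing $ (end of URL). Python's urllib.robotparser does not follow these precedence rules, so the following is a small hand-written sketch of that logic. The is_allowed function and the sample rules are hypothetical, and this is not Google's own parser.

```python
import re


def _pattern(path: str) -> re.Pattern:
    """Translate a robots.txt path (with * wildcards and an optional
    trailing $) into a regex matched from the start of the URL path."""
    anchored_end = path.endswith("$")
    if anchored_end:
        path = path[:-1]
    # Escape regex metacharacters, then turn each escaped "*" into ".*".
    regex = re.escape(path).replace(r"\*", ".*")
    return re.compile(regex + ("$" if anchored_end else ""))


def is_allowed(rules: list[tuple[str, str]], url_path: str) -> bool:
    """Longest matching rule path wins; on a tie, Allow beats Disallow.
    url_path should include any query string. No matching rule means crawlable."""
    best_length = -1
    allowed = True
    for directive, path in rules:
        if not path:
            continue  # an empty value (e.g. "Disallow:") matches nothing
        if _pattern(path).match(url_path):
            is_allow = directive.lower() == "allow"
            if len(path) > best_length or (len(path) == best_length and is_allow):
                best_length = len(path)
                allowed = is_allow
    return allowed


# Hypothetical rule set for a single User-agent group.
RULES = [
    ("Disallow", "/blogs/"),
    ("Allow", "/blogs/news/"),
    ("Disallow", "/collections/*sort_by*"),
]

if __name__ == "__main__":
    for path in ("/blogs/news/summer-sale", "/blogs/drafts/idea",
                 "/collections/shirts?sort_by=price", "/collections/shirts"):
        print(path, is_allowed(RULES, path))
```

Here Allow: /blogs/news/ re-opens one blog inside the blocked /blogs/ section because its path is longer than the Disallow rule's.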

Save Changes

After making the necessary edits, save the file. Shopify serves the updated rules at your robots.txt URL (e.g., yourstorename.com/robots.txt), so open that URL to confirm the output contains exactly the instructions you intended.
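A quick way to confirm the live output is to check that each directive you added actually appears in it. This sketch compares lines after stripping comments and ignoring the case of the directive name; missing_directives and the sample text are hypothetical, and it only checks that a line exists, not which User-agent group it belongs to.

```python
def _normalise(line: str) -> str:
    """Drop comments and spacing; lower-case the directive name only."""
    line = line.split("#", 1)[0].strip()
    name, sep, value = line.partition(":")
    if not sep:
        return line
    return f"{name.strip().lower()}:{value.strip()}"


def missing_directives(robots_txt: str, expected: list[str]) -> list[str]:
    """Return the expected directive lines that do not appear in robots_txt."""
    present = {_normalise(line) for line in robots_txt.splitlines()}
    return [d for d in expected if _normalise(d) not in present]


# Hypothetical live output copied from yourstorename.com/robots.txt.
LIVE_ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /checkout
Sitemap: https://yourstorename.com/sitemap.xml
"""

if __name__ == "__main__":
    print(missing_directives(LIVE_ROBOTS_TXT, ["Disallow: /cart", "Allow: /blogs/"]))
    # → ['Allow: /blogs/']
```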

Test for Errors

Once the changes are live, test whether they work as expected. In Google Search Console, the robots.txt report shows whether Google could fetch and parse your file, and the URL Inspection tool shows whether a specific URL is blocked by robots.txt. Use them to confirm that the pages you want blocked are not being crawled and that the pages you want indexed remain accessible.
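Alongside Search Console, you might keep a short list of URLs that must stay crawlable and URLs that must stay blocked, and check them against the file every time you edit it. This is a sketch using urllib.robotparser (prefix matching only, no wildcard support); check_expectations and the sample rules and URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def check_expectations(robots_txt: str, must_allow: list[str],
                       must_block: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Describe every URL whose crawlability differs from what you expect."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in must_allow:
        if not parser.can_fetch(user_agent, url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if parser.can_fetch(user_agent, url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


# Hypothetical edit: "Disallow: /collections" was meant to block a single
# collection, but as a prefix rule it blocks every URL starting with /collections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /collections
"""

if __name__ == "__main__":
    base = "https://yourstorename.com"  # placeholder domain
    for problem in check_expectations(
        ROBOTS_TXT,
        must_allow=[base + "/collections/summer", base + "/products/blue-shirt"],
        must_block=[base + "/cart"],
    ):
        print(problem)
```

An empty result means every URL on your list behaves as intended.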

Common Mistakes to Avoid

While editing your robots.txt file in Shopify, it’s important to be cautious, as small errors can negatively impact your site’s visibility and SEO. Here are some common mistakes to avoid:

- Blocking Important Pages by Mistake

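One of the most common errors is accidentally blocking important pages, such as your product pages or blog posts. If crawlers can't fetch these pages, search engines can't read their content, so the pages may drop out of results or appear without a description, which can severely affect your site's ranking.

Example Mistake:

User-agent: *
Disallow: /products/

This would block crawling of every URL under /products/, which is where Shopify serves your product pages, making them invisible to search engines.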

- Not Testing Changes Before Implementing

Always test your robots.txt file after editing it. If you skip this step, you might unintentionally prevent important pages from being crawled or, worse, block your entire site with a single line such as Disallow: /.

- Overcomplicating the File

Keep your robots.txt file simple. Overlapping or heavily wildcarded rules are hard to reason about, and crawlers don't all support the same directives (Google, for example, ignores Crawl-delay). Stick to the basics, Disallow and Allow, and only add a Sitemap line if your file doesn't already have one; Shopify's default file includes it.

- Not Updating Regularly

Your store will evolve, and your robots.txt file should evolve with it. Review the file periodically so it reflects changes in your site structure, such as new sections or outdated content that should no longer be crawled.

- Forgetting to Remove Temporary Blocks

If you temporarily block certain pages (e.g., during maintenance or while redesigning a page), remove the block once the work is done. Failing to remove temporary blocks can keep pages hidden from search engines for longer than necessary.
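Several of the mistakes above can be caught mechanically before you save. The sketch below flags a rule that blocks the whole site, a rule that blocks an entire important section, and Crawl-delay lines, which Google ignores. The list of important sections is an assumption based on Shopify's usual URL paths (/products, /collections, /blogs, /pages), so adjust it for your store; lint_robots_txt is a hypothetical helper.

```python
# Assumption: the Shopify sections most stores want crawled. Adjust as needed.
IMPORTANT_SECTIONS = ("/products", "/collections", "/blogs", "/pages")


def lint_robots_txt(robots_txt: str) -> list[str]:
    """Flag a few common robots.txt mistakes, with line numbers."""
    warnings = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments
        name, sep, value = line.partition(":")
        if not sep:
            continue  # blank or malformed line
        name, value = name.strip().lower(), value.strip()
        if name == "disallow" and value in ("/", "/*"):
            warnings.append(f"line {number}: '{line}' blocks the entire site")
        elif name == "disallow" and value.rstrip("/") in IMPORTANT_SECTIONS:
            warnings.append(f"line {number}: '{line}' blocks every URL under {value}")
        elif name == "crawl-delay":
            warnings.append(f"line {number}: Crawl-delay is ignored by Google")
    return warnings


# Hypothetical draft containing two of the mistakes described above.
SAMPLE = """\
User-agent: *
Disallow: /cart
Disallow: /products/
Crawl-delay: 10
"""

if __name__ == "__main__":
    for warning in lint_robots_txt(SAMPLE):
        print(warning)
```

This only catches whole-section blocks; narrower rules such as Disallow: /products/secret-item are left alone, which is usually what you want.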

Conclusion

Editing your robots.txt file in Shopify is a powerful way to control how search engines crawl your site. By configuring this file properly, you can ensure that crawlers focus on your most important content and skip irrelevant pages, which supports your SEO. Keep in mind that robots.txt is publicly readable, so it is not a way to hide sensitive pages, and it does not on its own keep pages out of the index. While editing it is a relatively simple task, it's important to avoid common mistakes and to update the file regularly as your store evolves. With these best practices, you can effectively manage your Shopify store's SEO and maintain a healthy, optimised online presence.